The Spanish government this week announced a major overhaul of a program in which the police rely on an algorithm to identify potential repeat victims of domestic violence, after officials faced questions about the system’s effectiveness.

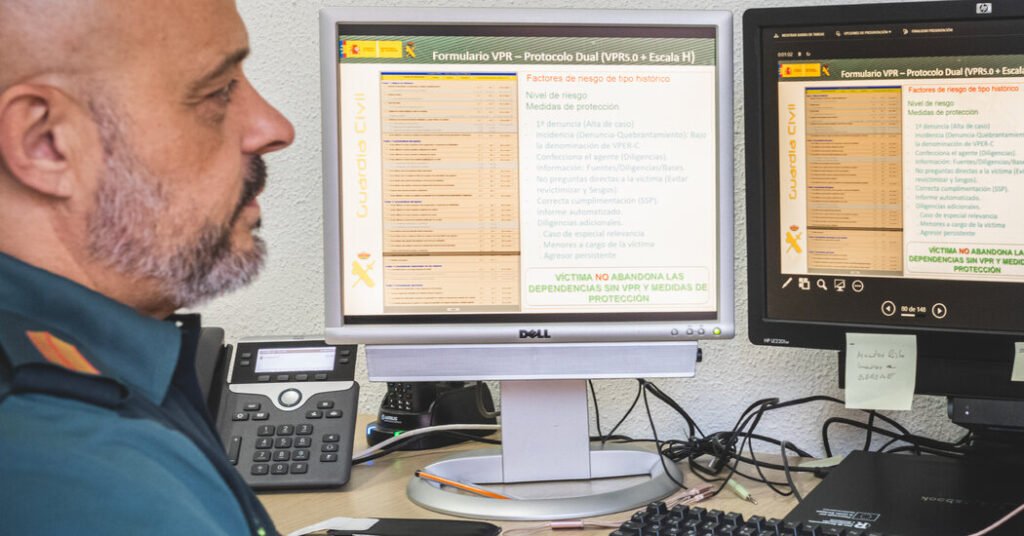

The program, VioGén, requires police officers to ask a victim a series of questions. The answers are entered into a software program that produces a score, from no risk to extreme risk, intended to flag the women who are most vulnerable to repeat abuse. The score helps determine what police protection and other services a woman can receive.

A New York Times investigation last year found that the police were highly reliant on the technology, almost always accepting the decisions made by the VioGén software. Some women whom the algorithm labeled at no risk or low risk of further harm later experienced more abuse, including dozens who were murdered, The Times found.

Spanish officials said the changes announced this week were part of a long-planned update to the system, which was introduced in 2007. They said the software had helped police departments with limited resources protect vulnerable women and reduce the number of repeat attacks.

In the updated system, VioGén 2, the software will no longer be able to label women as facing no risk. The police must also enter more information about a victim, which officials said would lead to more accurate predictions.

Other changes are intended to improve collaboration among government agencies involved in cases of violence against women, including making it easier to share information. In some cases, victims will receive personalized protection plans.

“Machismo is knocking at our doors and doing so with a violence unlike anything we have seen in a long time,” Ana Redondo, the minister of equality, said at a news conference on Wednesday. “It’s not the time to take a step back. It’s time to take a leap forward.”

Spain’s use of an algorithm to guide the treatment of gender violence is a far-reaching example of how governments are turning to algorithms to make important societal decisions, a trend that is expected to grow with the use of artificial intelligence. The system has been studied as a potential model for governments elsewhere that are trying to combat violence against women.

VioGén was created with the belief that an algorithm based on a mathematical model can serve as an unbiased tool to help the police find and protect women who might otherwise be missed. The yes-or-no questions include: Was a weapon used? Were there economic problems? Has the aggressor shown controlling behaviors?

Victims classified as higher risk received more protection, including regular patrols by their home, access to a shelter and police monitoring of their abuser’s movements. Those with lower scores got less aid.

As of November, Spain had more than 100,000 active cases of women who had been evaluated by VioGén, with about 85 percent of the victims classified as facing little risk of being hurt by their abuser again. Police officers in Spain are trained to overrule VioGén’s recommendations if the evidence warrants doing so, but The Times found that the risk scores were accepted about 95 percent of the time.

Victoria Rosell, a judge in Spain and a former government delegate focused on gender violence issues, said a period of “self-criticism” was needed for the government to improve VioGén. She said the system could be more accurate if it pulled information from additional government databases, including health care and education systems.

Natalia Morlas, president of Somos Más, a victims’ rights group, said she welcomed the changes, which she hoped would lead to better risk assessments by the police.

“Calibrating the victim’s risk well is so important that it can save lives,” Ms. Morlas said. She added that it was critical to maintain close human oversight of the system because a victim “has to be treated by people, not by machines.”