In the latest round of machine learning benchmark results from MLCommons, computers built around Nvidia’s new Blackwell GPU architecture outperformed all others. But AMD’s latest spin on its Instinct GPUs, the MI325X, proved a match for the Nvidia H200, the product it was meant to counter. The comparable results were mostly on tests of one of the smaller-scale large language models, Llama2 70B (for 70 billion parameters). However, in an effort to keep up with a rapidly changing AI landscape, MLPerf added three new benchmarks to better reflect where machine learning is headed.

MLPerf runs benchmarking for machine learning systems in an effort to provide an apples-to-apples comparison between computer systems. Submitters use their own software and hardware, but the underlying neural networks must be the same. There are now a total of 11 benchmarks for servers, with three added this year.

It has been “hard to keep up with the rapid development of the field,” says Miro Hodak, the cochair of MLPerf Inference. ChatGPT appeared only in late 2022, OpenAI unveiled its first large language model (LLM) that can reason through tasks last September, and LLMs have grown exponentially: GPT3 had 175 billion parameters, while GPT4 is thought to have nearly 2 trillion. As a result of the breakneck innovation, “we’ve increased the pace of getting new benchmarks into the field,” says Hodak.

The new benchmarks include two LLMs. The popular and relatively compact Llama2 70B is already an established MLPerf benchmark, but the consortium wanted something that mimicked the responsiveness people expect of chatbots today. So the new benchmark, “Llama2-70B Interactive,” tightens the requirements. Computers must produce at least 25 tokens per second under any circumstance and cannot take more than 450 milliseconds to begin an answer.
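To make the two limits concrete, here is a minimal Python sketch of checking a single response against them. It assumes per-token arrival timestamps are available and reads “under any circumstance” as a cap on every gap between consecutive tokens; the helper name and that interpretation are illustrative assumptions, not MLPerf’s actual measurement method.

```python
# Limits quoted in the article for Llama2-70B Interactive.
MAX_TIME_TO_FIRST_TOKEN_S = 0.450  # answer must begin within 450 milliseconds
MIN_TOKENS_PER_SECOND = 25.0       # at least 25 tokens per second


def meets_interactive_limits(request_time_s, token_times_s):
    """Return True if one response satisfies both limits.

    request_time_s: when the query was sent, in seconds.
    token_times_s: arrival time of each output token, in ascending order.
    Hypothetical helper for illustration; not part of any MLPerf tooling.
    """
    if not token_times_s:
        return False

    # Limit 1: time from the request to the first output token.
    time_to_first_token = token_times_s[0] - request_time_s
    if time_to_first_token > MAX_TIME_TO_FIRST_TOKEN_S:
        return False

    # Limit 2: 25 tokens per second means at most 1/25 s = 40 ms per token.
    # Assumption: the limit is applied to every gap between tokens.
    max_gap_s = 1.0 / MIN_TOKENS_PER_SECOND
    gaps = [later - earlier
            for earlier, later in zip(token_times_s, token_times_s[1:])]
    return all(gap <= max_gap_s + 1e-9 for gap in gaps)
```

For example, a response whose first token arrives at 400 ms and whose later tokens follow 30 ms apart passes, while one that starts at 500 ms, or that pauses 50 ms between tokens, does not.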

Seeing the rise of “agentic AI” (networks that can reason through complex tasks), MLPerf sought to test an LLM that would have some of the characteristics needed for that. They chose Llama3.1 405B for the job. That LLM has what’s called a wide context window, a measure of how much information (documents, samples of code, et cetera) it can take in at once. For Llama3.1 405B, that’s 128,000 tokens, more than 30 times as much as Llama2 70B.

The final new benchmark, called RGAT, is what’s called a graph attention network. It acts to classify information in a network. For example, the dataset used to test RGAT consists of scientific papers, which all have relationships between authors, institutions, and fields of study, making up 2 terabytes of data. RGAT must classify the papers into just under 3,000 topics.

Blackwell, Instinct Results

Nvidia continued its domination of MLPerf benchmarks through its own submissions and those of some 15 partners, such as Dell, Google, and Supermicro. Both its first- and second-generation Hopper architecture GPUs, the H100 and the memory-enhanced H200, made strong showings. “We were able to get another 60 percent performance over the last year” from Hopper, which went into production in 2022, says Dave Salvator, director of accelerated computing products at Nvidia. “It still has some headroom in terms of performance.”

But it was Nvidia’s Blackwell architecture GPU, the B200, that really dominated. “The only thing faster than Hopper is Blackwell,” says Salvator. The B200 packs in 36 percent more high-bandwidth memory than the H200, but, even more important, it can perform key machine learning math using numbers with a precision as low as 4 bits instead of the 8 bits Hopper pioneered. Lower-precision compute units are smaller, so more of them fit on the GPU, which leads to faster AI computing.

In the Llama3.1 405B benchmark, an eight-B200 system from Supermicro delivered nearly four times the tokens per second of an eight-H200 system by Cisco. And the same Supermicro system was three times as fast as the fastest H200 computer on the interactive version of Llama2 70B.

Nvidia used its combination of Blackwell GPUs and Grace CPU, called GB200, to demonstrate how well its NVL72 data links can integrate multiple servers in a rack, so that they perform as if they were one giant GPU. In an unverified result the company shared with reporters, a full rack of GB200-based computers delivers 869,200 tokens per second on Llama2 70B. The fastest system reported in this round of MLPerf was an Nvidia B200 server that delivered 98,443 tokens per second.
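For a sense of scale, the two throughput figures can be compared directly. The short Python sketch below only divides the numbers quoted above; the variable names are illustrative, and the rack figure remains unverified.

```python
# Throughput figures quoted in the article, in tokens per second on Llama2 70B.
rack_tokens_per_s = 869_200    # full GB200 rack (unverified, shared with reporters)
server_tokens_per_s = 98_443   # fastest B200 server reported in this MLPerf round

# How many times the single-server throughput the full rack delivers.
ratio = rack_tokens_per_s / server_tokens_per_s
print(f"Full rack vs. fastest single server: {ratio:.1f}x")
```

By this arithmetic the rack figure is a little under nine times that of the fastest single server in the round.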

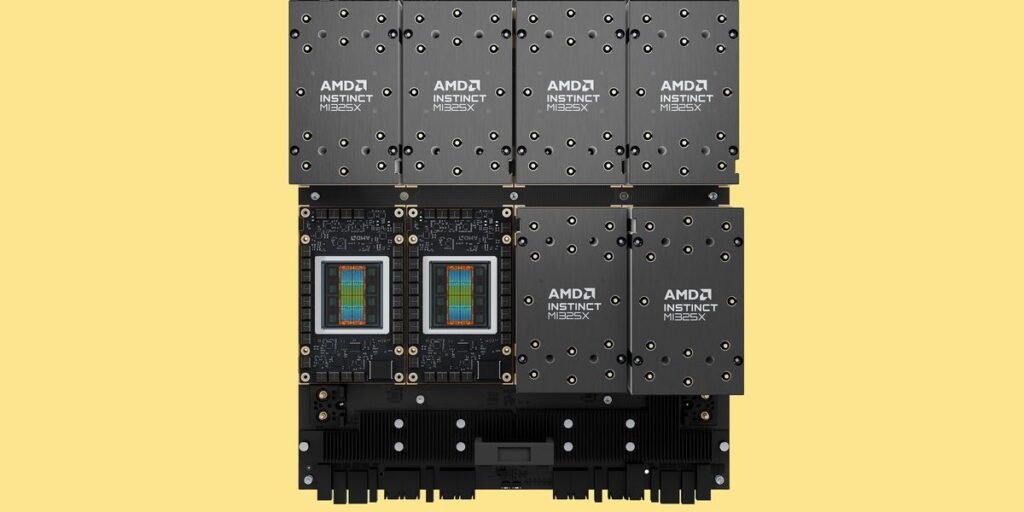

AMD is positioning its latest Instinct GPU, the MI325X, as providing performance competitive with Nvidia’s H200. MI325X has the same architecture as its predecessor, MI300, but it adds even more high-bandwidth memory and memory bandwidth: 256 gigabytes and 6 terabytes per second (a 33 percent and 13 percent boost, respectively).
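The percentage boosts imply the predecessor’s figures, which can be recovered by dividing out the increase. The following Python sketch does only that arithmetic from the numbers quoted above; the function name is illustrative, and the results are approximate because the percentages are rounded.

```python
def value_before_boost(new_value, boost_percent):
    """Return the value that, raised by boost_percent, gives new_value."""
    return new_value / (1 + boost_percent / 100)


# MI325X figures quoted in the article.
prev_memory_gb = value_before_boost(256, 33)      # high-bandwidth memory, GB
prev_bandwidth_tb_s = value_before_boost(6, 13)   # memory bandwidth, TB/s

print(f"Implied predecessor memory: about {prev_memory_gb:.0f} GB")
print(f"Implied predecessor bandwidth: about {prev_bandwidth_tb_s:.1f} TB/s")
```

That works out to roughly 192 gigabytes and 5.3 terabytes per second for the earlier part.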

Adding more memory is a play to handle larger and larger LLMs. “Larger models are able to take advantage of these GPUs because the model can fit in a single GPU or a single server,” says Mahesh Balasubramanian, director of data-center GPU marketing at AMD. “So you don’t have to have that communication overhead of going from one GPU to another GPU or one server to another server. When you take out those communications, your latency improves quite a bit.” AMD was able to take advantage of the extra memory through software optimization to boost the inference speed of DeepSeek-R1 eightfold.
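A rough way to see why capacity matters is to compare the size of a model’s weights with the memory available. The Python sketch below is a back-of-the-envelope estimate only: the bytes-per-parameter values are assumptions about numeric precision (2 bytes for 16-bit weights), the helper names are hypothetical, and it ignores the extra memory that inference needs beyond the weights themselves.

```python
GPU_MEMORY_GB = 256  # MI325X high-bandwidth memory per GPU, from the article


def weights_size_gb(params_billions, bytes_per_param):
    """Approximate weight size: 1 billion parameters at 1 byte each is 1 GB."""
    return params_billions * bytes_per_param


def weights_fit(params_billions, bytes_per_param, num_gpus=1):
    """True if the weights alone fit in the combined memory of num_gpus GPUs.

    Assumption: only weights are counted; caches and activations are ignored.
    """
    needed_gb = weights_size_gb(params_billions, bytes_per_param)
    return needed_gb <= GPU_MEMORY_GB * num_gpus


# Llama2 70B at an assumed 2 bytes per parameter: 140 GB, within one GPU.
print(weights_fit(70, 2))
# Llama3.1 405B at 2 bytes per parameter: 810 GB, too large for one GPU...
print(weights_fit(405, 2))
# ...but within the 2,048 GB of an eight-GPU server.
print(weights_fit(405, 2, num_gpus=8))
```

Under these assumptions, the 70-billion-parameter model fits on one GPU and the 405-billion-parameter model fits inside a single eight-GPU server, which is the situation Balasubramanian describes.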

On the Llama2 70B test, an eight-GPU MI325X computer came within 3 to 7 percent of the speed of a similarly tricked-out H200-based system. And on image generation, the MI325X system was within 10 percent of the Nvidia H200 computer.

AMD’s other noteworthy mark this round was from its partner, Mangoboost, which showed nearly fourfold performance on the Llama2 70B test by doing the computation across four computers.

Intel has historically put forth CPU-only systems in the inference competition to show that for some workloads you don’t really need a GPU. This time around saw the first data from Intel’s Xeon 6 chips, which were formerly known as Granite Rapids and are made using Intel’s 3-nanometer process. At 40,285 samples per second, the best image-recognition result for a dual-Xeon 6 computer was about one-third the performance of a Cisco computer with two Nvidia H100s.

Compared with Xeon 5 results from October 2024, the new CPU provides about an 80 percent boost on that benchmark and an even bigger boost on object detection and medical imaging. Since it first started submitting Xeon results in 2021 (the Xeon 3), the company has achieved an elevenfold boost in performance on Resnet.

For now, it seems Intel has quit the field in the AI accelerator-chip battle. Its alternative to the Nvidia H100, Gaudi 3, did not make an appearance in the new MLPerf results, nor in version 4.1, released last October. Gaudi 3 got a later-than-planned release because its software was not ready. In the opening remarks at Intel Vision 2025, the company’s invite-only customer conference, newly minted CEO Lip-Bu Tan seemed to apologize for Intel’s AI efforts. “I’m not happy with our current position,” he told attendees. “You’re not happy either. I hear you loud and clear. We’re working toward a competitive system. It won’t happen overnight, but we’ll get there for you.”

Google’s TPU v6e chip also made a showing, though the results were restricted to the image-generation task. At 5.48 queries per second, the 4-TPU system saw a 2.5-times boost over a similar computer using its predecessor, TPU v5e, in the October 2024 results. Even so, 5.48 queries per second was roughly in line with a similarly sized Lenovo computer using Nvidia H100s.

This post was corrected on 2 April 2025 to give the right value for high-bandwidth memory in the MI325X.
