Technology reporter

Getty Images

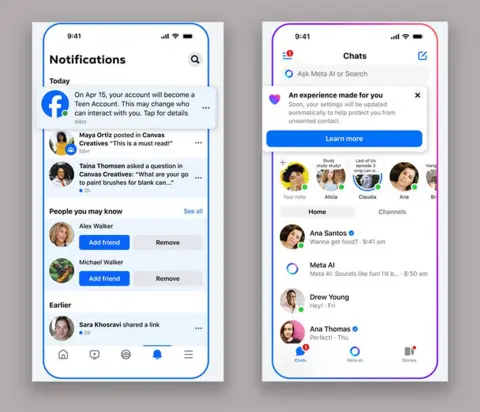

Meta is expanding Teen Accounts – what it considers its age-appropriate experience for under 18s – to Facebook and Messenger.

The system involves putting younger teens on the platforms into more restricted settings by default, with parental permission required in order to live stream or turn off image protections for messages.

It was first introduced last September on Instagram, which Meta says “fundamentally changed the experience for teens” on the platform.

But campaigners say it is unclear what difference Teen Accounts has actually made.

“Eight months after Meta rolled out Teen Accounts on Instagram, we've had silence from Mark Zuckerberg about whether this has actually been effective and even what sensitive content it actually tackles,” said Andy Burrows, chief executive of the Molly Rose Foundation.

He added it was “appalling” that parents still did not know whether the settings prevented their children being “algorithmically recommended” inappropriate or harmful content.

Matthew Sowemimo, associate head of policy for child safety online at the NSPCC, said Meta’s changes “must be combined with proactive measures so dangerous content doesn't proliferate on Instagram, Facebook and Messenger in the first place”.

But Drew Benvie, chief executive of social media consultancy Battenhall, said it was a step in the right direction.

“For once, big social are fighting for the leadership position not for the most highly engaged teen user base, but for the safest,” he said.

However, he also pointed out there was a risk, as with all platforms, that teens could “find a way around safety settings”.

The expanded roll-out of Teen Accounts is beginning in the UK, US, Australia and Canada from Tuesday.

Companies that provide services popular with children have faced pressure to introduce parental controls or safety mechanisms to safeguard their experiences.

In the UK, they also face legal requirements to prevent children from encountering harmful and illegal content on their platforms, under the Online Safety Act.

Roblox recently enabled parents to block specific games or experiences on the hugely popular platform as part of its suite of controls.

What are Teen Accounts?

How Teen Accounts work depends on the self-declared age of the user.

Those aged 16 to 18 will be able to toggle off default safety settings like having their account set to private.

But 13 to 15 year olds must obtain parental permission to turn off such settings – which can only be done by adding a parent or guardian to their account.

Meta says it has moved at least 54 million teens globally into Teen Accounts since they were introduced in September.

It says that 97% of 13 to 15 year olds have also kept its built-in restrictions.

The system relies on users being truthful about their age when they set up accounts – with Meta using methods such as video selfies to verify their information.

It said in 2024 it would begin using artificial intelligence (AI) to identify teens who might be lying about their age in order to place them back into Teen Accounts.

Findings published by the UK media regulator Ofcom in November 2024 suggested that 22% of eight to 17 year olds lie that they are 18 or over on social media apps.

Some youngsters told the BBC it was still “so easy” to lie about their age on platforms.

Meta

In coming months, younger teens will also need parental consent to go live on Instagram or turn off nudity protection – which blurs suspected nude images in direct messages.

Concerns over children and teenagers receiving unwanted nude or sexual images, or feeling pressured to share them in potential sextortion scams, have prompted calls for Meta to take tougher action.

Prof Sonia Livingstone, director of the Digital Futures for Children centre, said Meta’s expansion of Teen Accounts may be a welcome move amid “a growing desire from parents and children for age-appropriate social media”.

But she said questions remained over the company’s overall protections for young people from online harms, “as well as from its own data-driven and highly commercialised practices”.

“Meta must be accountable for its effects on young people whether or not they use a teen account,” she added.

Mr Sowemimo of the NSPCC said it was important that accountability for keeping children safe online, through safety controls, did not fall to parents and children themselves.

“Ultimately, tech companies must be held responsible for protecting children on their platforms and Ofcom needs to hold them to account for their failures.”