This post originally appeared on Recode China AI.

For more than a decade, Nvidia’s chips have been the beating heart of China’s AI ecosystem. Its GPUs powered search engines, video apps, smartphones, electric vehicles, and the current wave of generative AI models. Even as Washington tightened export rules for advanced AI chips, Chinese companies kept settling for and buying “China-only” Nvidia chips stripped of their most advanced features: the H800, A800, and H20.

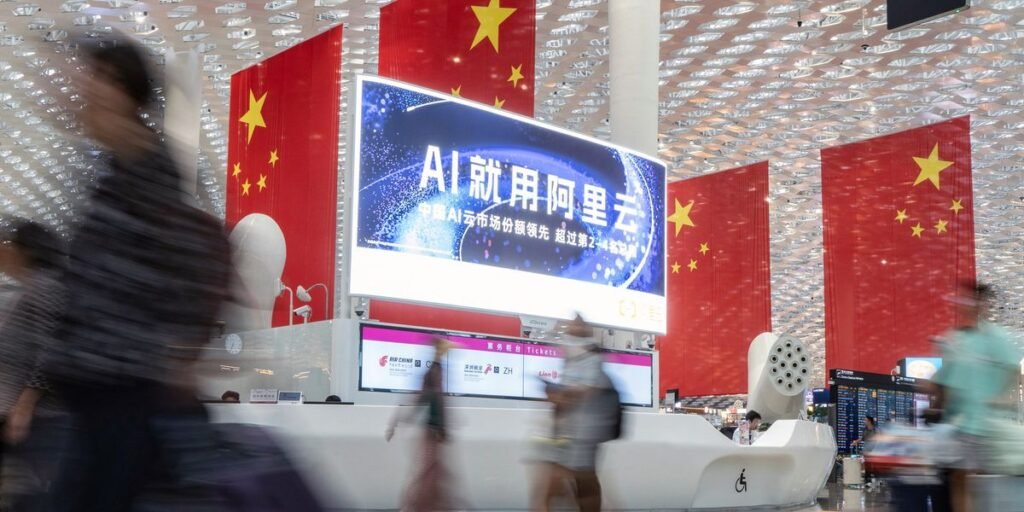

But by 2025, patience in Beijing had apparently snapped. State media began labeling Nvidia’s China-compliant H20 as unsafe and possibly compromised with hidden “backdoors.” Regulators summoned company executives for questioning, while reports from the Financial Times surfaced that tech companies like Alibaba and ByteDance had been quietly told to cancel new Nvidia GPU orders. The Chinese AI startup DeepSeek also signaled in August that its next model will be designed to run on China’s “next-generation” domestic AI chips.

The message was clear: China could no longer bet its AI future on a U.S. supplier. If Nvidia wouldn’t, or couldn’t, sell its best hardware in China, domestic alternatives would have to fill the void by designing specialized chips for both AI training (building models) and AI inference (running them).

That’s hard. In fact, some say it’s impossible. Nvidia’s chips set the global benchmark for AI computing power. Matching them requires not just raw silicon performance but also memory, interconnect bandwidth, software ecosystems, and, above all, manufacturing capacity at scale.

Still, a few contenders have emerged as China’s best hope: Huawei, Alibaba, Baidu, and Cambricon. Each tells a different story about China’s bid to reinvent its AI hardware stack.

Huawei’s AI Chips Are in the Lead

Huawei is betting on rack-scale supercomputing clusters that pool thousands of chips together for massive gains in computing power. VCG/Getty Images

If Nvidia is out, Huawei, one of China’s largest tech companies, looks like the natural replacement. Its Ascend line of AI chips has matured under U.S. sanctions, and in September 2025 the company laid out a multi-year public roadmap:

- Ascend 950, expected in 2026, with a performance target of 1 petaflop in FP8, a low-precision format commonly used in AI chips. It will have 128 to 144 gigabytes of on-chip memory and interconnect bandwidth (a measure of how fast it moves data between components) of up to 2 terabytes per second.

- Ascend 960, expected in 2027, is projected to double the 950’s capabilities.

- Ascend 970 is further down the road and promises significant leaps in both compute power and memory bandwidth.

The current offering is the Ascend 910B, introduced after U.S. sanctions cut Huawei off from global suppliers. Roughly comparable to the A100, Nvidia’s top chip in 2020, it became the de facto option for companies that couldn’t get Nvidia’s GPUs. In 2024, one Huawei official even claimed the 910B outperformed the A100 by around 20 percent in some training tasks. But the chip still relies on an older type of high-bandwidth memory (HBM2E) and can’t match Nvidia’s H20: It holds about a third less data in memory and transfers data between chips about 40 percent more slowly.

The company’s latest answer is the 910C, a dual-chiplet design that fuses two 910Bs. In theory, it can approach the performance of Nvidia’s H100 (Nvidia’s flagship chip until 2024). Huawei showcased a 384-chip Atlas 900 A3 SuperPoD cluster that reached roughly 300 Pflops of compute, implying that each 910C can deliver just under 800 teraflops when performing calculations in the FP16 format. That’s still short of the H100’s roughly 2,000 Tflops, but it’s enough to train large-scale models if deployed at scale. In fact, Huawei has detailed how it used Ascend AI chips to train DeepSeek-like models.
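The per-chip estimate follows from dividing the cluster total by the chip count. A minimal Python sketch of that back-of-envelope arithmetic, using only the figures quoted above and assuming the cluster total is a plain sum of per-chip peaks (it ignores interconnect overhead and real-world utilization):

```python
# Back-of-envelope estimate: divide reported cluster throughput by chip count
# to get an implied per-chip figure, then compare with the quoted H100 number.
# Assumption: the cluster total is a simple sum of per-chip peak throughput.

CLUSTER_PFLOPS_FP16 = 300   # Atlas 900 A3 SuperPoD, approximate
CHIPS_IN_CLUSTER = 384      # Ascend 910C chips in that SuperPoD
H100_TFLOPS_FP16 = 2_000    # rough H100 figure quoted in the text


def per_chip_tflops(cluster_pflops: float, chips: int) -> float:
    """Implied per-chip throughput in Tflops (1 Pflop = 1,000 Tflops)."""
    return cluster_pflops * 1_000 / chips


ascend_910c = per_chip_tflops(CLUSTER_PFLOPS_FP16, CHIPS_IN_CLUSTER)
ratio_vs_h100 = ascend_910c / H100_TFLOPS_FP16

print(f"Implied 910C throughput: {ascend_910c:.0f} Tflops FP16")
print(f"Fraction of quoted H100 figure: {ratio_vs_h100:.0%}")
```

This prints roughly 781 Tflops per chip, about 39 percent of the quoted H100 number. One caveat: the roughly 2,000 Tflops H100 figure matches Nvidia’s FP16 spec-sheet number with structured sparsity, and the dense figure is about half that, so the size of the gap depends on which numbers are being compared.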

To address the performance gap at the single-chip level, Huawei is betting on rack-scale supercomputing clusters that pool thousands of chips together for massive gains in computing power. Building on its Atlas 900 A3 SuperPoD, the company plans to launch the Atlas 950 SuperPoD in 2026, linking 8,192 Ascend chips to deliver 8 exaflops of FP8 performance, backed by 1,152 TB of memory and 16.3 petabytes per second of interconnect bandwidth. The cluster will occupy a footprint larger than two full basketball courts. Looking further ahead, Huawei’s Atlas 960 SuperPoD is set to scale up to 15,488 Ascend chips.
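As a rough consistency check, the announced Atlas 950 SuperPoD totals can be divided by the chip count and compared with the Ascend 950 targets listed earlier. This sketch assumes (the text doesn’t say so explicitly) that the SuperPoD is built from Ascend 950-series chips and that its totals are simple per-chip sums; decimal units are used throughout.

```python
# Divide the announced Atlas 950 SuperPoD totals by its chip count and compare
# the implied per-chip numbers with the Ascend 950 targets.
# Assumptions: chips are Ascend 950-series; totals are plain per-chip sums.
# Decimal units: 1 EF = 1,000 PF, 1 TB = 1,000 GB, 1 PB/s = 1,000 TB/s.

CHIPS = 8_192
CLUSTER_EXAFLOPS_FP8 = 8.0
CLUSTER_MEMORY_TB = 1_152
CLUSTER_INTERCONNECT_PB_S = 16.3

per_chip_pflops = CLUSTER_EXAFLOPS_FP8 * 1_000 / CHIPS           # EF -> PF
per_chip_memory_gb = CLUSTER_MEMORY_TB * 1_000 / CHIPS           # TB -> GB
per_chip_link_tb_s = CLUSTER_INTERCONNECT_PB_S * 1_000 / CHIPS   # PB/s -> TB/s

print(f"FP8 compute per chip:  {per_chip_pflops:.2f} PF (target: 1 PF)")
print(f"Memory per chip:       {per_chip_memory_gb:.1f} GB (target: 128-144 GB)")
print(f"Interconnect per chip: {per_chip_link_tb_s:.2f} TB/s (target: up to 2 TB/s)")
```

The results (about 0.98 PF, 140.6 GB, and 1.99 TB/s per chip) fall within the stated Ascend 950 targets, which is consistent with that assumption but doesn’t confirm it.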

Hardware isn’t Huawei’s only play. Its MindSpore deep learning framework and lower-level CANN software are designed to lock customers into its ecosystem, offering domestic alternatives to PyTorch (a popular framework from Meta) and CUDA (Nvidia’s platform for programming GPUs), respectively.

State-backed firms and U.S.-sanctioned companies such as iFlytek, 360, and SenseTime have already signed on as Huawei customers. The Chinese tech giants ByteDance and Baidu have also ordered small batches of chips for trials.

Yet Huawei isn’t an automatic winner. Chinese telecom operators such as China Mobile and China Unicom, which are also responsible for building China’s data centers, remain wary of Huawei’s influence. They often prefer to mix GPUs and AI chips from different suppliers rather than fully commit to Huawei. Big internet platforms, meanwhile, worry that partnering too closely could hand Huawei leverage over their own intellectual property.

Even so, Huawei is better positioned than ever to take on Nvidia.

Alibaba Pushes AI Chips to Protect Its Cloud Business

Alibaba Cloud’s business depends on reliable access to training-grade AI chips. So it’s making its own. Sun Pengxiong/VCG/Getty Images

Alibaba’s chip unit, T-Head, was founded in 2018 with modest ambitions around open-source RISC-V processors and data center servers. Today, it’s emerging as one of China’s most aggressive bids to compete with Nvidia.

T-Head’s first AI chip was the Hanguang 800, an efficient inference chip announced in 2019; it can process 78,000 images per second and accelerate recommendation algorithms and large language models (LLMs). Built on a 12-nanometer process with around 17 billion transistors, the chip can perform up to 820 trillion operations per second (TOPS) and access its memory at around 512 GB per second.
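The TOPS and memory-speed figures matter together. One common way to relate them is the roofline model, a general performance model rather than anything T-Head has published for this chip: a workload reaches peak compute only if it performs enough operations per byte fetched from memory. A minimal sketch using the Hanguang 800’s quoted peaks, assuming both are achievable at the same time (real chips also have on-chip caches that change the picture); the sample intensities are hypothetical:

```python
# Roofline model: attainable throughput = min(peak compute, intensity * bandwidth),
# where intensity is operations performed per byte moved from memory.
# Inputs are the quoted Hanguang 800 peaks; sample intensities are hypothetical.

PEAK_TOPS = 820              # trillion operations per second
MEMORY_BANDWIDTH_GB_S = 512  # gigabytes per second


def ridge_point(peak_ops_per_s: float, bytes_per_s: float) -> float:
    """Operations per byte at which the roofline bends from memory-bound to compute-bound."""
    return peak_ops_per_s / bytes_per_s


def attainable_tops(intensity_ops_per_byte: float) -> float:
    """Upper bound on throughput (TOPS) for a workload with the given intensity."""
    # ops/byte * GB/s = G ops/s; divide by 1,000 to convert to T ops/s.
    memory_bound = intensity_ops_per_byte * MEMORY_BANDWIDTH_GB_S / 1_000
    return min(PEAK_TOPS, memory_bound)


ridge = ridge_point(PEAK_TOPS * 1e12, MEMORY_BANDWIDTH_GB_S * 1e9)
print(f"Ridge point: about {ridge:.0f} operations per byte")
for intensity in (100, 1_000, 5_000):
    print(f"{intensity:>5} ops/byte -> at most {attainable_tops(intensity):.0f} TOPS")
```

With these inputs the ridge point is about 1,600 operations per byte: a hypothetical workload at 100 operations per byte would be capped near 51 TOPS by the 512 GB/s figure, far below the 820 TOPS peak.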

But its latest design, the PPU, is something else entirely. Built with 96 GB of high-bandwidth memory and support for high-speed PCIe 5.0 connections, the PPU is pitched as a direct rival to Nvidia’s H20.

During a state-backed television program featuring a China Unicom data center, the PPU was presented as capable of rivaling Nvidia’s H20. Reports suggest this data center runs more than 16,000 PPUs out of 22,000 chips in total. The Information also reported that Alibaba has been using its AI chips to train LLMs.

Beyond chips, Alibaba Cloud also recently upgraded its supernode server, named Panjiu, which now features 128 AI chips per rack, a modular design for easy upgrades, and full liquid cooling.

For Alibaba, the motivation is as much about cloud dominance as national policy. Its Alibaba Cloud business depends on reliable access to training-grade chips. By making its own silicon competitive with Nvidia’s, Alibaba keeps its infrastructure roadmap under its own control.

Baidu’s Big Chip Reveal in 2025

At a recent developer conference, Baidu unveiled a 30,000-chip cluster powered by its third-generation P800 processors. Qilai Shen/Bloomberg/Getty Images

Baidu’s chip story began long before today’s AI frenzy. As early as 2011, the search giant was experimenting with field-programmable gate arrays (FPGAs) to accelerate its deep learning workloads for search and advertising. That internal project later grew into Kunlun.

The first generation arrived in 2018. Kunlun 1 was built on Samsung’s 14-nm process and delivered around 260 TOPS with a peak memory bandwidth of 512 GB per second. Three years later came Kunlun 2, a modest upgrade. Fabricated on a 7-nm node, it delivered 256 TOPS for low-precision INT8 calculations and 128 Tflops for FP16 while cutting power to about 120 watts. Baidu aimed this second generation less at training and more at inference-heavy tasks such as its Apollo autonomous cars and Baidu AI Cloud services. Also in 2021, Baidu spun off Kunlun into an independent company called Kunlunxin, which was then valued at US $2 billion.
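Two quick ratios follow from the Kunlun 2 figures. Halving operand width from 16 to 8 bits typically doubles peak throughput on the same hardware, and dividing by the power figure gives a rough peak efficiency. This sketch assumes the chip draws about 120 watts while running at peak, which the quoted numbers alone don’t guarantee:

```python
# Ratios derived from the quoted Kunlun 2 figures.
# Assumption: the ~120 W power figure applies while the chip runs at peak.

KUNLUN2_INT8_TOPS = 256
KUNLUN2_FP16_TFLOPS = 128
KUNLUN2_POWER_W = 120

# Narrower operands (8-bit vs 16-bit) let the same silicon do more operations.
precision_ratio = KUNLUN2_INT8_TOPS / KUNLUN2_FP16_TFLOPS

# Peak throughput per watt at each precision.
int8_tops_per_watt = KUNLUN2_INT8_TOPS / KUNLUN2_POWER_W
fp16_tflops_per_watt = KUNLUN2_FP16_TFLOPS / KUNLUN2_POWER_W

print(f"INT8 vs FP16 throughput: {precision_ratio:.1f}x")
print(f"Peak efficiency: {int8_tops_per_watt:.2f} TOPS/W (INT8), "
      f"{fp16_tflops_per_watt:.2f} Tflops/W (FP16)")
```

The INT8 figure is exactly twice the FP16 figure, and the peak efficiency works out to roughly 2.13 TOPS per watt at INT8.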

For years, little surfaced about Kunlun’s progress. But that changed dramatically in 2025. At its developer conference, Baidu unveiled a 30,000-chip cluster powered by its third-generation P800 processors. Each P800 chip, according to research by Guosen Securities, reaches roughly 345 Tflops at FP16, putting it on the same level as Huawei’s 910B and Nvidia’s A100. Its interconnect bandwidth is reportedly close to that of Nvidia’s H20. Baidu pitched the system as capable of training “DeepSeek-like” models with hundreds of billions of parameters. Baidu’s latest multimodal models, the Qianfan-VL family with 3 billion, 8 billion, and 70 billion parameters, were all trained on its Kunlun P800 chips.
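Scaling the per-chip estimate to the full cluster gives a sense of its size. A minimal sketch, assuming every chip contributes the Guosen Securities estimate; the utilization values are hypothetical placeholders, since large training runs rarely sustain peak throughput:

```python
# Aggregate compute of the 30,000-chip P800 cluster from the per-chip estimate.
# Assumption: all chips contribute equally; utilization levels are hypothetical.

CHIPS_IN_CLUSTER = 30_000
P800_TFLOPS_FP16 = 345  # per-chip estimate attributed to Guosen Securities


def aggregate_exaflops(chips: int, per_chip_tflops: float, utilization: float = 1.0) -> float:
    """Total cluster throughput in Eflops (1 Eflop = 1,000,000 Tflops)."""
    return chips * per_chip_tflops * utilization / 1_000_000


peak = aggregate_exaflops(CHIPS_IN_CLUSTER, P800_TFLOPS_FP16)
print(f"Theoretical peak: {peak:.2f} Eflops FP16")

# Hypothetical sustained-utilization levels, for illustration only.
for utilization in (0.3, 0.5):
    sustained = aggregate_exaflops(CHIPS_IN_CLUSTER, P800_TFLOPS_FP16, utilization)
    print(f"At {utilization:.0%} utilization: {sustained:.2f} Eflops")
```

The theoretical peak works out to about 10.35 Eflops of FP16 compute; what a real training run achieves depends on interconnect, software, and workload.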

Kunlun’s ambitions extend beyond Baidu’s internal needs. This year, Kunlun chips secured orders worth more than 1 billion yuan (about $139 million) for China Mobile’s AI projects. That news helped restore investor confidence: Baidu’s stock is up 64 percent this year, with the Kunlun reveal playing a central role in that rise.

Just today, Baidu announced a roadmap for its AI chips, promising to roll out a new product every year for the next five years. In 2026, the company will launch the M100, optimized for large-scale inference, and in 2027 the M300 will arrive, optimized for training and inference of large multimodal models. Baidu hasn’t yet released details about the chips’ specifications.

Still, challenges loom. Samsung has been Baidu’s foundry partner from day one, producing Kunlun chips on advanced process nodes. Yet reports from Seoul suggest Samsung has paused production of Baidu’s 4-nm designs.

Cambricon’s Chip Moves Make Waves in the Stock Market

Cambricon struggled in the early 2020s, with chips like the MLU 290 that couldn’t compete with Nvidia’s. CFOTO/Future Publishing/Getty Images

The chip company Cambricon is probably the best-performing publicly traded company on China’s domestic stock market. Over the past year, Cambricon’s share price has jumped nearly 500 percent.

The company was officially spun out of the Chinese Academy of Sciences in 2016, but its roots stretch back to a 2008 research program focused on brain-inspired processors for deep learning. By the mid-2010s, the founders believed AI-specific chips were the future.

In its early years, Cambricon focused on accelerators called neural processing units (NPUs) for both mobile devices and servers. Huawei was a crucial first customer, licensing Cambricon’s designs for its Kirin mobile processors. But when Huawei pivoted to developing its own chips, Cambricon lost a flagship partner, which forced it to expand quickly into edge and cloud accelerators. Backing from Alibaba, Lenovo, iFlytek, and major state-linked funds helped push Cambricon’s valuation to $2.5 billion by 2018 and eventually landed it on Shanghai’s Nasdaq-like STAR Market in 2020.

The next few years were rough. Revenues fell, investors pulled back, and the company bled cash while struggling to keep up with Nvidia’s breakneck pace. For a while, Cambricon looked like another cautionary tale of Chinese semiconductor ambition. But by late 2024, its fortunes began to change. The company returned to profitability, thanks largely to its latest MLU series of chips.

That product line has steadily matured. The MLU 290, built on a 7-nm process with 46 billion transistors, was designed for hybrid training and inference tasks, with interconnect technology that could scale to clusters of more than 1,000 chips. The follow-up MLU 370, the last version before Cambricon was sanctioned by the U.S. government in 2022, can reach 96 Tflops at FP16.

Cambricon’s real breakthrough came with the MLU 590 in 2023. Built on a 7-nm process, the 590 delivered peak performance of 345 Tflops at FP16, with some reports suggesting it could even surpass Nvidia’s H20 in certain scenarios. Importantly, it introduced support for lower-precision data formats like FP8, which eased memory-bandwidth pressure and boosted efficiency. This chip didn’t just mark a technical leap; it turned Cambricon’s finances around, restoring confidence that the company could deliver commercially viable products.
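Why lower precision eases memory pressure comes down to bytes per value: FP16 uses 2 bytes and FP8 uses 1. A minimal sketch of the weight-only footprint for a hypothetical 70-billion-parameter model (the same size as the largest Qianfan-VL model, used here purely for scale); it ignores activations, caches, and format overheads such as scaling factors:

```python
# Weight storage (and bytes read per full pass over the weights) by number format.
# Assumption: weights only; activations, caches, and scaling metadata are ignored.

BYTES_PER_VALUE = {"FP32": 4, "FP16": 2, "FP8": 1}


def weight_footprint_gb(num_params: float, fmt: str) -> float:
    """Weight-only size in decimal GB for the given numeric format."""
    return num_params * BYTES_PER_VALUE[fmt] / 1e9


NUM_PARAMS = 70e9  # hypothetical 70-billion-parameter model

for fmt in ("FP16", "FP8"):
    print(f"{fmt}: {weight_footprint_gb(NUM_PARAMS, fmt):.0f} GB of weights")
```

This prints 140 GB for FP16 and 70 GB for FP8. Halving the bytes per weight halves the data that must be streamed from memory each time the full set of weights is read, which is why FP8 helps most on bandwidth-bound workloads such as LLM inference.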

Now all eyes are on the MLU 690, currently in development. Industry chatter suggests it could approach, or even rival, Nvidia’s H100 on some metrics. Expected upgrades include denser compute cores, higher memory bandwidth, and further refinements in FP8 support. If it succeeds, it could catapult Cambricon from “domestic alternative” status to a true competitor at the global frontier.

Cambricon still faces hurdles: its chips aren’t yet produced at the same scale as Huawei’s or Alibaba’s, and its past instability makes buyers cautious. But symbolically, its comeback matters. Once dismissed as a struggling startup, Cambricon is now seen as proof that China’s domestic chip path can yield profitable, high-performance products.

A Geopolitical Tug-of-War

At its core, the battle over Nvidia’s place in China isn’t really about teraflops or bandwidth. It’s about control. Washington sees chip restrictions as a way to protect national security and slow Beijing’s advance in AI. Beijing sees rejecting Nvidia as a way to reduce strategic vulnerability, even if it means temporarily living with less powerful hardware.

China’s big four contenders, Huawei, Alibaba, Baidu, and Cambricon, along with smaller players such as Biren, Muxi, and Suiyuan, don’t yet offer true substitutes. Most of their offerings are barely comparable with the A100, Nvidia’s best chip five years ago, and they are still working to catch up with the H100, which became available three years ago.

Each player is also bundling its chips with its own proprietary software stack. This approach could force Chinese developers accustomed to Nvidia’s CUDA to spend more time adapting their AI models, which, in turn, could slow both training and inference.

DeepSeek’s development of its next AI model, for example, has reportedly been delayed. The main reason appears to be the company’s effort to run more of its AI training or inference on Huawei’s chips.

The question is not whether Chinese companies can build chips; they clearly can. The question is whether, and when, they can match Nvidia’s combination of performance, software support, and end-user trust. On that front, the jury is still out.

But one thing is certain: China no longer wants to play second fiddle in the world’s most important technology race.
