The hunt is on for anything that can surmount AI's perennial memory wall: even fast models are slowed down by the time and energy needed to carry data between processor and memory. Resistive RAM (RRAM) could circumvent the wall by allowing computation to happen in the memory itself. Unfortunately, most types of this nonvolatile memory are too unstable and unwieldy for that purpose.

Fortunately, a potential solution may be at hand. At December's IEEE International Electron Device Meeting (IEDM), researchers from the University of California, San Diego showed they could run a learning algorithm on an entirely new type of RRAM.

“We actually redesigned RRAM, completely rethinking the way it switches,” says Duygu Kuzum, an electrical engineer at the University of California, San Diego, who led the work.

RRAM stores data as a level of resistance to the flow of current. The key digital operation in a neural network, multiplying arrays of numbers and then summing the results, can be done in analog simply by running current through an array of RRAM cells, connecting their outputs, and measuring the resulting current.
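To make the analog trick concrete, here is a minimal sketch of an idealized RRAM crossbar, assuming input numbers are applied as row voltages and stored weights are cell conductances (one over resistance). Each cell passes a current equal to voltage divided by resistance (Ohm's law), and the currents meeting on a shared column wire add up (Kirchhoff's current law), so each column current is a multiply-and-sum. The function name and the example values are hypothetical, and the model ignores wire resistance, sneak currents, and device noise.

```python
from typing import List


def crossbar_column_currents(voltages: List[float],
                             resistances: List[List[float]]) -> List[float]:
    """Idealized crossbar (hypothetical helper, not from the paper).

    resistances[i][j] is the resistance in ohms of the cell joining row i
    to column j. Each cell contributes I = V / R (Ohm's law), and all cells
    on one column share a wire, so their currents add (Kirchhoff's law).
    The result is one dot product per column, computed "in memory".
    """
    if len(resistances) != len(voltages):
        raise ValueError("need one row of cells per input voltage")
    n_cols = len(resistances[0])
    currents = [0.0] * n_cols
    for j in range(n_cols):
        for i, v in enumerate(voltages):
            currents[j] += v / resistances[i][j]  # current through cell (i, j)
    return currents


if __name__ == "__main__":
    # Two inputs, two outputs, cells in the megaohm range (illustrative values).
    v_in = [0.1, 0.2]                 # volts
    r_cells = [[1e6, 2e6],
               [1e6, 4e6]]            # ohms
    print(crossbar_column_currents(v_in, r_cells))  # roughly [3e-07, 1e-07] amps
```

A real array reads all columns at once; the nested loop here only spells out the arithmetic that the physics performs in parallel.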

Traditionally, RRAM stores data by creating low-resistance filaments in the higher-resistance surrounds of a dielectric material. Forming these filaments often requires voltages too high for standard CMOS, hindering its integration inside processors. Worse, forming the filaments is a noisy and random process, not ideal for storing data. (Imagine a neural network's weights randomly drifting. Answers to the same question would change from one day to the next.)

Moreover, the noisy nature of most filament-based RRAM cells means they must be isolated from their surrounding circuits, usually with a selector transistor, which makes 3D stacking difficult.

Limitations like these mean that traditional RRAM isn't great for computing. In particular, Kuzum says, it's difficult to use filamentary RRAM for the sort of parallel matrix operations that are crucial for today's neural networks.

So, the San Diego researchers decided to dispense with the filaments entirely. Instead, they developed devices that switch an entire layer from high to low resistance and back again. This format, called “bulk RRAM”, can do away with both the annoying high-voltage filament-forming step and the geometry-limiting selector transistor.

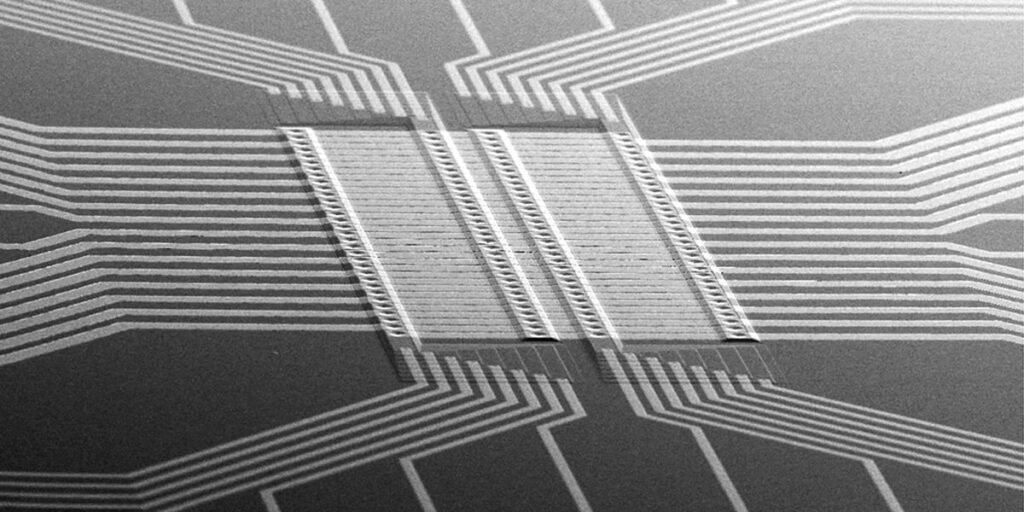

The San Diego group wasn't the first to build bulk RRAM devices, but it made breakthroughs both in shrinking them and in forming 3D circuits with them. Kuzum and her colleagues shrank RRAM to the nanoscale; their device was just 40 nm across. They also managed to stack bulk RRAM into as many as eight layers.

With a single pulse of identical voltage, the team could program an eight-layer stack of cells, each of which can take any of 64 resistance values, a number that's very difficult to achieve with traditional filamentary RRAM. And whereas the resistance of most filament-based cells is limited to kiloohms, the San Diego stack is in the megaohm range, which Kuzum says is better for parallel operations.

“We can actually tune it to anywhere we want, but we think that from an integration and system-level simulations perspective, megaohm is the desirable range,” Kuzum says.

These two benefits, a greater number of resistance levels and a higher resistance, could allow this bulk RRAM stack to perform more complex operations than traditional RRAM can manage.
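Sixty-four distinguishable levels amount to log2(64) = 6 bits per cell. The sketch below shows one way a neural-network weight could be quantized onto those levels and then mapped to a target conductance. It assumes the levels are evenly spaced in conductance and uses a hypothetical 1 to 10 megaohm window; neither assumption comes from the San Diego work, and all names are illustrative.

```python
import math

NUM_LEVELS = 64                              # from the article: 64 resistance values per cell
BITS_PER_CELL = int(math.log2(NUM_LEVELS))   # 6 bits

# Hypothetical resistance window in the megaohm range (assumed, not reported).
R_LOW_OHMS = 1e6
R_HIGH_OHMS = 10e6
G_MAX = 1.0 / R_LOW_OHMS                     # highest conductance, siemens
G_MIN = 1.0 / R_HIGH_OHMS                    # lowest conductance, siemens


def weight_to_level(w: float, w_min: float, w_max: float) -> int:
    """Map a weight to the nearest of NUM_LEVELS evenly spaced levels (0..63)."""
    w = min(max(w, w_min), w_max)            # clip to the representable range
    frac = (w - w_min) / (w_max - w_min)
    return round(frac * (NUM_LEVELS - 1))


def level_to_conductance(level: int) -> float:
    """Assume levels are spaced evenly in conductance between G_MIN and G_MAX."""
    return G_MIN + level * (G_MAX - G_MIN) / (NUM_LEVELS - 1)


if __name__ == "__main__":
    for w in (-1.0, 0.25, 1.0):
        lvl = weight_to_level(w, -1.0, 1.0)
        print(w, lvl, level_to_conductance(lvl))
```

The quantization step here is (w_max - w_min) / 63, so more levels per cell means a finer approximation of each weight without spending extra cells on it.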

Kuzum and colleagues assembled multiple eight-layer stacks into a 1-kilobyte array that required no selectors. Then they tested the array with a continual learning algorithm: making the chip classify data from wearable sensors (for example, reading data from a waist-mounted smartphone to determine whether its wearer was sitting, walking, climbing stairs, or taking another action) while constantly adding new data. Tests showed an accuracy of 90 percent, which the researchers say is comparable to the performance of a digitally implemented neural network.

This test exemplifies what Kuzum thinks can especially benefit from bulk RRAM: neural network models on edge devices, which may need to learn from their environment without accessing the cloud.

“We're doing a lot of characterization and material optimization to design a device specifically engineered for AI applications,” Kuzum says.

The ability to integrate RRAM into an array like this is a significant advance, says Albert Talin, a materials scientist at Sandia National Laboratories in Livermore, California, and a bulk RRAM researcher who wasn't involved in the San Diego group's work. “I think that any step in terms of integration is very useful,” he says.

But Talin highlights a potential obstacle: the ability to retain data for an extended period of time. While the San Diego group showed their RRAM could retain data at room temperature for several years (on par with flash memory), Talin says its retention at the higher temperatures where computers actually operate is less certain. “That's one of the major challenges of this technology,” he says, especially when it comes to edge applications.

If engineers can prove the technology, then all types of models may benefit. The memory wall has only grown higher this decade, as traditional memory hasn't been able to keep up with the ballooning demands of large models. Anything that allows models to operate on the memory itself could be a welcome shortcut.
